Forget about dusty old maps and boring stats: imagine navigating the ever-changing jungle of enterprise software development! It's like discovering hidden tribes of people who code in modern programming languages (Python! Kotlin!), use cutting-edge CI/CD tools (Jenkins, CircleCI), work in big teams, and have years of experience bringing ideas to life from the ground up with highly optimised code. Think of it as building magical castles in the cloud.

That's where we're headed, adventurer! We'll trek through Silicon Valley's glittering skyscrapers, sneak into Bangalore's secret startup dens, and even chill by the beach with coders from Africa brewing the next big tech revolution. No region is off-limits! Along the way, we'll decode the whispers of rising tech trends: AI breathing innovation into your data, blockchain building invisible fortresses, and old giants like Java shaking hands with nimble new stars like Swift. We'll peek into everyone's toolbox, from open-source bazaars to enterprise treasure chests, and maybe even borrow a cool gadget or two.

All of this is based on our most recent Pulse Report (Q3 2023), which you can find here. But before we dive in: if you are a professional developer or know someone who is, consider taking part in our ongoing 26th Developer Nation survey to help improve the developer experience.

Enterprise development isn't just about gadgets and gizmos. It's about the passionate humans behind the code: the keyboard warriors battling bugs, the dreamers sketching the future, and the masterminds building software that'll change the world (one line at a time!). Learning about enterprise developers is essential for a holistic understanding of software development, especially in large organizations where the challenges and requirements differ from those of smaller projects. This knowledge can benefit a range of stakeholders, from business leaders and project managers to individual developers and technology enthusiasts.

So, grab your coding backpack, your adventurous spirit, and your insatiable curiosity. It's time to rewrite the rules of the jungle, one bug fix, one feature update, one innovative idea at a time.

Regional Disparities

While regions like South Asia hold a scant 9.5% share of the world's enterprise developers, North America and Western Europe & Israel stand as towering giants, holding around 31% and 28.6% of the talent pool, respectively. This chasm in geographical distribution raises the question: what factors have sculpted such an uneven landscape?

This disparity likely stems from socioeconomic factors. Developed economies have better educational resources and established tech ecosystems, fostering a critical mass of skilled developers. Thriving tech hubs draw talent from elsewhere with promising careers and salaries, while nascent ecosystems struggle to compete, which hinders local talent growth.

The stark disparities in the distribution of enterprise developers highlight the need for concerted efforts to bridge the digital divide and create a more equitable global tech landscape. By investing in human capital, fostering collaboration, and promoting inclusive growth, we can unlock the full potential of technology for all corners of the world.

Technology Preferences

The technological preferences of enterprise developers paint a vivid picture of the industry’s driving forces. Web development and backend tasks reign supreme, captivating a whopping 82% of the developer pool. This focus reflects the ever-expanding web ecosystem and the crucial role of robust backend infrastructure in powering modern applications.

While web and backend rule the roost, mobile development and artificial intelligence (AI) are carving their own niches. With their ubiquitous presence in our daily lives, mobile apps attract roughly 35% of developers, driven by the ever-evolving mobile landscape and the insatiable demand for user-centric experiences. AI, though still in the early stages of enterprise adoption, holds the attention of around 33% of developers, hinting at its immense potential to revolutionise various sectors.

Industry Spotlight: Software and Finance Lead the Way

Beyond technologies, the industries drawing developer interest are equally revealing. Software products and services take the crown, with nearly 40% of developers gravitating towards this dynamic domain. This affinity stems from the constant churn of innovation and the fast-paced nature of the software world. Financial services and banking, with their complex data landscapes and growing reliance on technology, come in second at 21.6%, showcasing the increasing convergence of finance and technology.

These trends signify a close interplay between developer preferences and industry needs. The prevalence of web and backend development aligns seamlessly with the software and financial sectors’ demand for a robust online presence and advanced data processing. Simultaneously, the growing interest in mobile and AI mirrors the increasing importance of user engagement and data-driven insights across various industries.

Understanding these connections provides valuable insights into the future of enterprise development. The emphasis on web, mobile, and AI is expected to strengthen, driven by both developer enthusiasm and industry demands. As these technologies advance, the software and financial sectors will likely stay ahead, attracting and fostering top developer talent.

CI/CD Practices

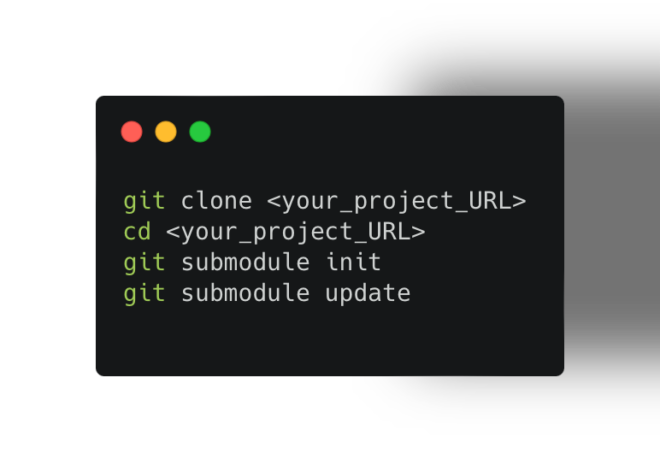

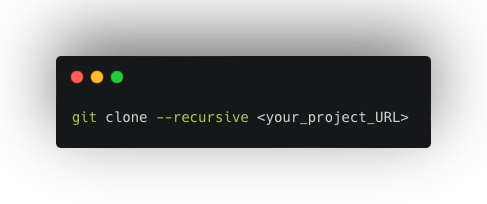

As the software development lifecycle evolves, Continuous Integration and Continuous Deployment (CI/CD) practices have become indispensable. Jenkins emerges as the dominant force in this arena, with a staggering 66.5% usage, followed by GitLab's self-hosted version. Jenkins is especially popular in organizations with over 1,000 employees, where self-hosted GitLab comes second at 37.2%. Azure Pipelines, IBM UrbanCode, and TeamCity trail as smaller players, catering to narrower segments of the market.

Containerization and Cloud Services

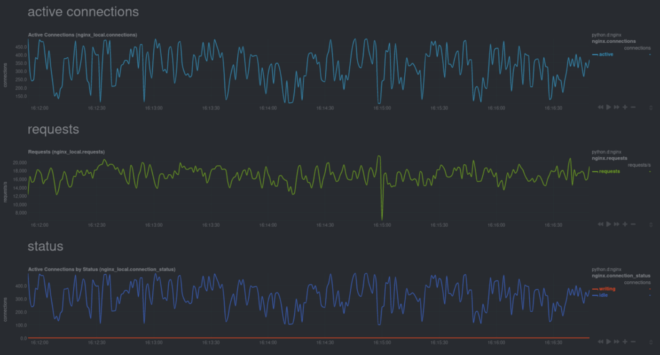

The age-old frustration of "It works on my machine but not yours" is fast becoming a relic of the past, thanks to containerisation technologies like LXC and Docker. These container technologies are especially favoured by backend developers, with an impressive 61.8% usage. Database-as-a-Service (DBaaS) is also prominent at 34.6%, and cloud monitoring services round out the backend developer's toolkit at 23.7% usage.

DevOps Tooling

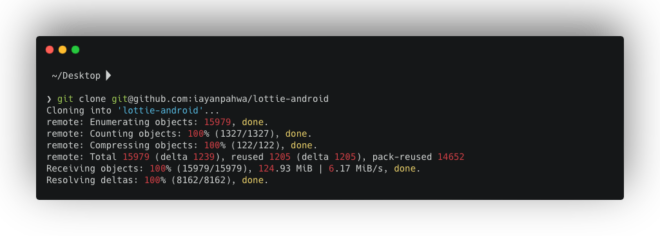

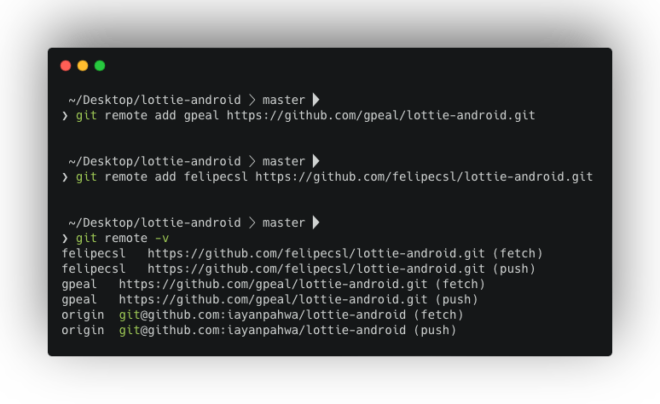

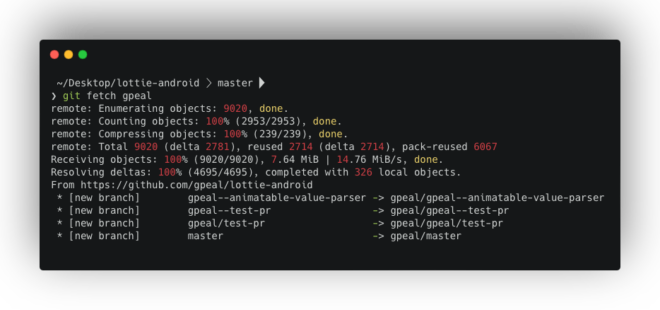

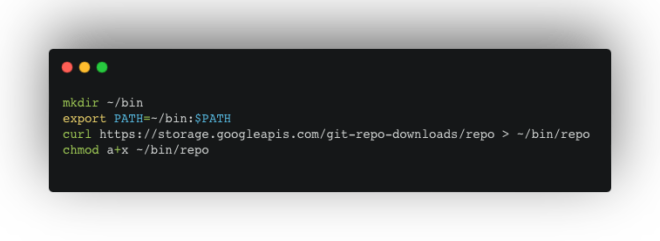

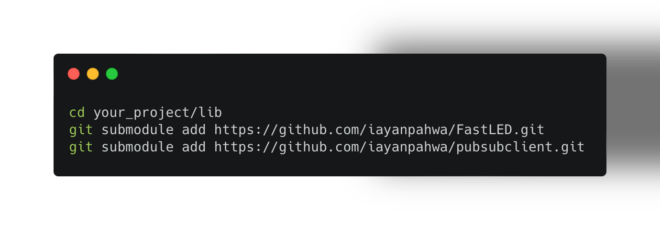

In the DevOps domain, GitHub is the leader with a substantial 28% usage. Google Cloud Developer Tools follow at 13.8%, while AWS CodeCommit lags at around 8%. These figures underline the importance of collaboration and version control in modern software development.

Conclusion

The enterprise development world is dynamic and shaped by regional influences and technological preferences. As we navigate the evolving landscape, it is clear that specific tools and practices have become integral to the development lifecycle. Whether it’s the dominance of Jenkins in CI/CD or the widespread adoption of containerisation technologies, staying informed about the trends is essential for developers and businesses alike. As we move forward, anticipating and adapting to these shifts will be key to thriving in the ever-changing enterprise development world.

If you are an enterprise developer, I'd love to connect with you personally, learn more about your work and day-to-day challenges, and explore how Developer Nation and SlashData can help, drawing on our decades of experience in developer market research and community building. Please reach out to me at ayan.pahwa@slashdata.co or on social media. Cheers!

– @iAyanPahwa